Mathematical optimization

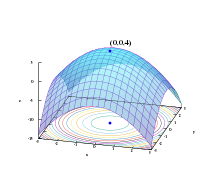

Optimization problems arise in all quantitative disciplines from computer science and engineering[3] to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics.Here are some examples: Consider the following notation: This denotes the minimum value of the objective function x2 + 1, when choosing x from the set of real numbersSimilarly, the notation asks for the maximum value of the objective function 2x, where x may be any real number.Fermat and Lagrange found calculus-based formulae for identifying optima, while Newton and Gauss proposed iterative methods for moving towards an optimum.The term "linear programming" for certain optimization cases was due to George B. Dantzig, although much of the theory had been introduced by Leonid Kantorovich in 1939.Dantzig published the Simplex algorithm in 1947, and also John von Neumann and other researchers worked on the theoretical aspects of linear programming (like the theory of duality) around the same time.In other words, defining the problem as multi-objective optimization signals that some information is missing: desirable objectives are given but combinations of them are not rated relative to each other.Classical optimization techniques due to their iterative approach do not perform satisfactorily when they are used to obtain multiple solutions, since it is not guaranteed that different solutions will be obtained even with different starting points in multiple runs of the algorithm.More generally, they may be found at critical points, where the first derivative or gradient of the objective function is zero or is undefined, or on the boundary of the choice set.The maximum theorem of Claude Berge (1963) describes the continuity of an optimal solution as a function of underlying parameters.There exist efficient numerical techniques for minimizing convex functions, such as interior-point methods.The first and still popular method for ensuring convergence relies on line searches, which optimize a function along one dimension.The iterative methods used to solve problems of nonlinear programming differ according to whether they evaluate Hessians, gradients, or only function values.The derivatives provide detailed information for such optimizers, but are even harder to calculate, e.g. approximating the gradient takes at least N+1 function evaluations.For approximations of the 2nd derivatives (collected in the Hessian matrix), the number of function evaluations is in the order of N².List of some well-known heuristics: Problems in rigid body dynamics (in particular articulated rigid body dynamics) often require mathematical programming techniques, since you can view rigid body dynamics as attempting to solve an ordinary differential equation on a constraint manifold;[11] the constraints are various nonlinear geometric constraints such as "these two points must always coincide", "this surface must not penetrate any other", or "this point must always lie somewhere on this curve".The Journal of Economic Literature codes classify mathematical programming, optimization techniques, and related topics under JEL:C61-C63.The most common civil engineering problems that are solved by optimization are cut and fill of roads, life-cycle analysis of structures and infrastructures,[28] resource leveling,[29][30] water resource allocation, traffic management[31] and schedule optimization.These algorithms run online and repeatedly determine values for decision variables, such as choke openings in a process plant, by iteratively solving a mathematical optimization problem including constraints and a model of the system to be controlled.Given a set of geophysical measurements, e.g. seismic recordings, it is common to solve for the physical properties and geometrical shapes of the underlying rocks and fluids.

Mathematical ProgrammingOptimization (disambiguation)Optimum (disambiguation)maximumSimionescu's functiondiscrete optimizationcontinuous optimizationcomputer scienceengineeringoperations researcheconomicsmathematicsoptimization problemmaximizing or minimizingreal functionapplied mathematicsvariablescontinuousdiscreteobjectintegerpermutationcountable setconstrained problemsfunctionreal numberscomputer programminglinear programmingphysicsenergysystemmodeledmachine learningcost functionsubsetEuclidean spaceconstraintsdomaincandidate solutionsloss functionfunctionalglobal minimumconvexconvex problemGlobal optimizationnumerical analysisinfinityundefinedArg maxargumentintervalfeasible setintegersFermatLagrangeNewtonGeorge B. DantzigLeonid KantorovichUnited StateslogisticsSimplex algorithmJohn von NeumannRichard BellmanDimitri BertsekasMichel BierlaireStephen P. BoydRoger FletcherMartin GrötschelRonald A. HowardFritz JohnNarendra KarmarkarWilliam KarushLeonid KhachiyanBernard KoopmanHarold KuhnLászló LovászDavid LuenbergerArkadi NemirovskiYurii NesterovLev PontryaginR. Tyrrell RockafellarNaum Z. ShorAlbert TuckerConvex programmingconcavepolyhedronpolytopeboundedSecond-order cone programmingSemidefinite programmingsemidefinitematricesConic programmingGeometric programmingposynomialsmonomialsInteger programmingQuadratic programmingFractional programmingNonlinear programmingStochastic programmingrandom variablesRobust optimizationCombinatorial optimizationStochastic optimizationInfinite-dimensional optimizationdimensionalHeuristicsmetaheuristicsConstraint satisfactionartificial intelligenceautomated reasoningConstraint programmingSpace mappingsurrogate modelCalculus of variationsOptimal controlDynamic programmingBellman equationMathematical programming with equilibrium constraintsvariational inequalitiescomplementaritiesMulti-objective optimizationPareto setPareto frontiervector optimization