Supercomputer

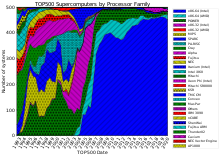

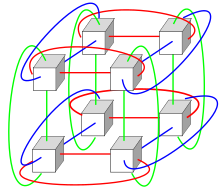

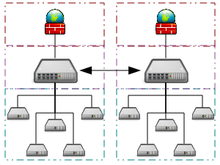

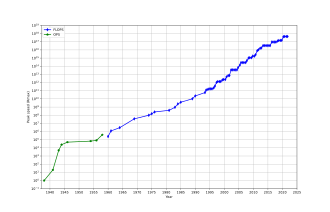

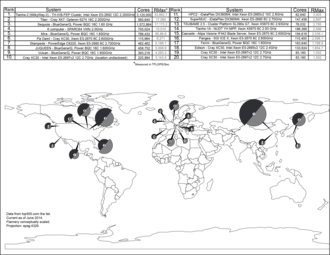

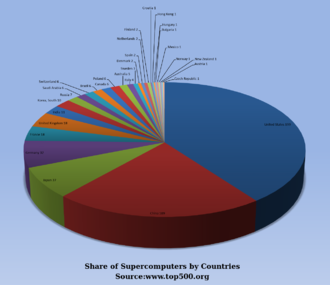

[6] Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.

This machine was the first realized example of a true massively parallel computer, in which many processors worked together to solve different parts of a single larger problem.[26] But by the early 1980s, several teams were working on parallel designs with thousands of processors, notably the Connection Machine (CM) that developed from research at MIT. Several updated versions followed; the CM-5 supercomputer is a massively parallel processing computer capable of many billions of arithmetic operations per second.[28]

Fujitsu's VPP500 from 1992 is unusual since, to achieve higher speeds, its processors used GaAs, a material normally reserved for microwave applications due to its toxicity. The Paragon was a MIMD machine that connected processors via a high-speed two-dimensional mesh, allowing processes to execute on separate nodes and communicate via the Message Passing Interface. Similar designs using custom hardware were made by many companies, including the Evans & Sutherland ES-1, MasPar, nCUBE, Intel iPSC and the Goodyear MPP. By the turn of the 21st century, designs featuring tens of thousands of commodity CPUs were the norm, with later machines adding graphic units to the mix.[36]

While at the University of New Mexico, Bader sought to build a supercomputer running Linux using consumer off-the-shelf parts and a high-speed low-latency interconnection network. The prototype utilized an Alta Technologies "AltaCluster" of eight dual 333 MHz Intel Pentium II computers running a modified Linux kernel.[37][38] Though Linux-based clusters using consumer-grade parts, such as Beowulf, existed prior to the development of Bader's prototype and RoadRunner, they lacked the scalability, bandwidth, and parallel computing capabilities to be considered "true" supercomputers.[42][43]

As the price, performance and energy efficiency of general-purpose graphics processing units (GPGPUs) have improved, a number of petaFLOPS supercomputers such as Tianhe-I and Nebulae have started to rely on them.

Heat management is a major issue in complex electronic devices and affects powerful computer systems in various ways.

In the most common scenario, environments such as PVM and MPI for loosely connected clusters and OpenMP for tightly coordinated shared-memory machines are used. Significant effort is required to optimize an algorithm for the interconnect characteristics of the machine it will be run on; the aim is to prevent any of the CPUs from wasting time waiting on data from other nodes.

Grid computing has been applied to a number of large-scale embarrassingly parallel problems that require supercomputing performance scales. As of February 2017, BOINC recorded a processing power of over 166 petaFLOPS through over 762 thousand active computers (hosts) on the network. The Penguin On Demand (POD) cloud is a bare-metal compute model to execute code, but each user is given a virtualized login node.[102]

Architectures that lend themselves to supporting many users for routine everyday tasks may have a lot of capacity but are not typically considered supercomputers, given that they do not solve a single very complex problem.[102]

In general, the speed of supercomputers is measured and benchmarked in FLOPS (floating-point operations per second), and not in terms of MIPS (million instructions per second), as is the case with general-purpose computers. However, the performance of a supercomputer can be severely affected by fluctuations caused by factors such as system load, network traffic, and concurrent processes, as noted by Brehm and Bruhwiler (2015).[105] The FLOPS measurement is quoted either as the theoretical floating-point performance of a processor (derived from the manufacturer's processor specifications and shown as "Rpeak" in the TOP500 lists), which is generally unachievable when running real workloads, or as the achievable throughput, derived from the LINPACK benchmarks and shown as "Rmax" in the TOP500 list.

The same research group also succeeded in using a supercomputer to simulate a number of artificial neurons equivalent to the entirety of a rat's brain. The National Oceanic and Atmospheric Administration uses supercomputers to crunch hundreds of millions of observations to help make weather forecasts more accurate.[128] Erik P. DeBenedictis of Sandia National Laboratories has theorized that a zettaFLOPS (10^21, or one sextillion, FLOPS) computer is required to accomplish full weather modeling, which could cover a two-week time span accurately. The next step for microprocessors may be into the third dimension; and, specializing to Monte Carlo, the many layers could be identical, simplifying the design and manufacturing process.

Supercomputing facilities were constructed to efficiently remove the increasing amount of heat produced by modern multi-core central processing units. Located at the Thor Data Center in Reykjavík, Iceland, this supercomputer relies on completely renewable sources for its power rather than fossil fuels.[134]

Examples of supercomputers in fiction include HAL 9000, Multivac, The Machine Stops, GLaDOS, The Evitable Conflict, Vulcan's Hammer, Colossus, WOPR, AM, and Deep Thought.
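
The distinction between theoretical peak ("Rpeak") and measured LINPACK throughput ("Rmax") drawn above can be illustrated with some back-of-envelope arithmetic. The following is a minimal sketch, not an official TOP500 method: the node count, core count, clock rate, FLOPs per cycle and measured result are all hypothetical values chosen for illustration, and the peak formula (nodes × cores × clock × FLOPs per cycle) is a common rule of thumb assumed here. Only the BOINC and zettaFLOPS figures come from the text.

```python
"""Back-of-envelope FLOPS arithmetic (illustrative values only)."""

PETA = 10**15
ZETTA = 10**21


def rpeak_flops(nodes: int, cores_per_node: int, clock_hz: float,
                flops_per_cycle: int) -> float:
    """Theoretical peak: assumes every core retires its maximum FLOPs each cycle."""
    return nodes * cores_per_node * clock_hz * flops_per_cycle


def efficiency(rmax: float, rpeak: float) -> float:
    """Fraction of the theoretical peak achieved on a benchmark run."""
    if rpeak <= 0:
        raise ValueError("rpeak must be positive")
    return rmax / rpeak


# Hypothetical machine (not a real system): 1,000 nodes, 64 cores per node,
# 2 GHz clock, 16 double-precision FLOPs per core per cycle.
peak = rpeak_flops(nodes=1_000, cores_per_node=64,
                   clock_hz=2.0e9, flops_per_cycle=16)

# Hypothetical measured LINPACK result for that machine.
measured = 1.5 * PETA

# Figures quoted in the text: BOINC as of February 2017.
boinc_total = 166 * PETA
boinc_hosts = 762_000
boinc_per_host = boinc_total / boinc_hosts

# Number of BOINC-sized (166 petaFLOPS) systems needed to reach one zettaFLOPS.
boinc_systems_per_zetta = ZETTA / boinc_total

if __name__ == "__main__":
    print(f"Rpeak:       {peak / PETA:.3f} petaFLOPS")
    print(f"Rmax/Rpeak:  {efficiency(measured, peak):.1%}")
    print(f"BOINC/host:  {boinc_per_host / 1e9:.1f} gigaFLOPS")
    print(f"zetta/BOINC: {boinc_systems_per_zetta:.0f}x")
```

Under these assumptions the BOINC figures work out to an average of a little under 220 gigaFLOPS per host, and a zettaFLOPS machine would need roughly six thousand times the aggregate BOINC throughput quoted for 2017.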

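The "embarrassingly parallel" workloads mentioned above in connection with grid computing are those that split into work units needing no communication with each other, so no processor waits on data from another node. A minimal sketch of that pattern follows, using a Monte Carlo estimate of π as a stand-in problem. It uses Python's standard library rather than PVM, MPI or OpenMP, and the names `count_hits`, `make_units` and `estimate_pi` are hypothetical helpers introduced only for this illustration.

```python
"""Embarrassingly parallel sketch: independent work units, one final reduction."""
import random
from concurrent.futures import ProcessPoolExecutor
from typing import Callable, Iterable, List, Tuple

WorkUnit = Tuple[int, int]  # (random seed, number of samples)


def count_hits(unit: WorkUnit) -> int:
    """Count random points in the unit square that land inside the quarter circle."""
    seed, samples = unit
    rng = random.Random(seed)  # private generator: no state shared between units
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def make_units(total_samples: int, n_units: int, base_seed: int = 0) -> List[WorkUnit]:
    """Split the sample budget into n_units pieces whose sizes differ by at most one."""
    if n_units <= 0:
        raise ValueError("n_units must be positive")
    base, extra = divmod(total_samples, n_units)
    return [(base_seed + i, base + (1 if i < extra else 0)) for i in range(n_units)]


def estimate_pi(total_samples: int, n_units: int,
                map_fn: Callable[..., Iterable[int]] = map) -> float:
    """Estimate pi; map_fn decides whether the units run serially or in parallel."""
    units = make_units(total_samples, n_units)
    hits = sum(map_fn(count_hits, units))  # the only step that combines results
    return 4.0 * hits / total_samples


if __name__ == "__main__":
    # Serial run using the built-in map.
    print(estimate_pi(200_000, 8))
    # Same work units spread over local processes; the result is identical
    # because each unit carries its own seed.
    with ProcessPoolExecutor() as pool:
        print(estimate_pi(200_000, 8, map_fn=pool.map))
```

Because each unit is self-contained, the same decomposition could in principle be handed to volunteer hosts or cluster nodes instead of local processes; only the `map_fn` passed in would change.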