Basis (linear algebra)

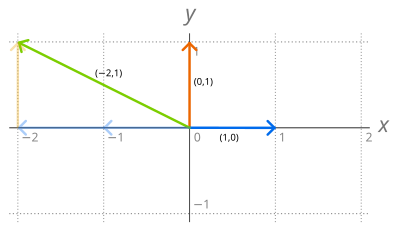

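In mathematics, a set B of elements of a vector space V is called a basis (plural: bases) if every element of V can be written in a unique way as a finite linear combination of elements of B. The coefficients of this linear combination are referred to as components or coordinates of the vector with respect to B.[1] The elements of a basis are called basis vectors. Equivalently, a set B is a basis if its elements are linearly independent and every element of V is a linear combination of elements of B. In other words, a basis is a linearly independent spanning set. A vector space can have several bases; however, all the bases have the same number of elements, called the dimension of the vector space. Basis vectors find applications in the study of crystal structures and frames of reference.

A basis B of a vector space V over a field F (such as the real numbers $\mathbb{R}$ or the complex numbers $\mathbb{C}$) is a linearly independent subset of V that spans V. This means that a subset B of V is a basis if it satisfies the two following conditions:

- linear independence: for every finite subset $\{v_1, \ldots, v_m\}$ of B, if $c_1 v_1 + \cdots + c_m v_m = 0$ for some $c_1, \ldots, c_m$ in F, then $c_1 = \cdots = c_m = 0$;
- spanning property: for every vector v in V, one can choose $a_1, \ldots, a_n$ in F and $v_1, \ldots, v_n$ in B such that $v = a_1 v_1 + \cdots + a_n v_n$.

The scalars $a_i$ are called the coordinates of the vector v with respect to the basis B, and by the first property they are uniquely determined.

A vector space that has a finite basis is called finite-dimensional. In this case, the finite subset can be taken as B itself to check for linear independence in the above definition.

It is often convenient or even necessary to have an ordering on the basis vectors. This arises, for example, when discussing orientation, or when one considers the scalar coefficients of a vector with respect to a basis without referring explicitly to the basis elements. In this case, the ordering is necessary for associating each coefficient with the corresponding basis element.

The set $\mathbb{R}^2$ of the ordered pairs of real numbers is a vector space under the operations of component-wise addition $(a, b) + (c, d) = (a + c, b + d)$ and scalar multiplication $\lambda (a, b) = (\lambda a, \lambda b)$, where $\lambda$ is any real number. A simple basis of this vector space consists of the two vectors $e_1 = (1, 0)$ and $e_2 = (0, 1)$: every vector $(a, b)$ can be written uniquely as $a e_1 + b e_2$. More generally, if F is a field, the set $F^n$ of n-tuples of elements of F is a vector space for similarly defined addition and scalar multiplication. Its standard basis consists of the n vectors $e_i$ whose i-th entry is 1 and whose other entries are 0.

If F is a field, the collection $F[X]$ of all polynomials in one indeterminate X with coefficients in F is an F-vector space. One basis of this space is the monomial basis, consisting of all monomials $1, X, X^2, \ldots$. Any set of polynomials that contains exactly one polynomial of each degree is also a basis; over the real numbers, the Chebyshev polynomials are an example.

Many properties of finite bases result from the Steinitz exchange lemma. It states that, for any vector space V, given a finite spanning set S and a linearly independent set L of n elements of V, one may replace n well-chosen elements of S by the elements of L. The result is a spanning set containing L, having its other elements in S, and having the same number of elements as S.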
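To make the definition concrete, here is a minimal Python sketch. It assumes the vector space is $\mathbb{Q}^n$ and that the candidate basis has exactly n vectors. The hypothetical helper `coordinates` solves $a_1 v_1 + \cdots + a_n v_n = v$ by Gauss-Jordan elimination with exact `fractions.Fraction` arithmetic. It returns the coordinates when the vectors form a basis and raises `ValueError` when they are linearly dependent, so uniqueness of the coordinates and linear independence show up as the same pivot condition.

```python
from fractions import Fraction

def coordinates(basis, v):
    """Coordinates of v over an ordered basis of Q^n (hypothetical helper).

    basis: list of n vectors, each a list of n numbers; v: list of n numbers.
    Raises ValueError if the vectors are linearly dependent (not a basis).
    """
    n = len(basis)
    # Augmented matrix [b_1 ... b_n | v], with the basis vectors as columns.
    m = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])]
         for i in range(n)]
    for col in range(n):
        # Look for a nonzero pivot in this column; none means dependence.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("vectors are linearly dependent, not a basis")
        m[col], m[pivot] = m[pivot], m[col]
        # Scale the pivot row so that the pivot equals 1.
        p = m[col][col]
        m[col] = [x / p for x in m[col]]
        # Clear this column in every other row (Gauss-Jordan elimination).
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    # The last column now holds the unique coordinates a_1, ..., a_n.
    return [row[n] for row in m]
```

For example, over the basis $(1, 1), (1, -1)$ of $\mathbb{Q}^2$, the vector $(3, 1)$ has coordinates $2, 1$, since $2(1, 1) + 1(1, -1) = (3, 1)$. Exact fractions are used instead of floats so that a zero pivot really means linear dependence.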
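The Steinitz exchange lemma can be illustrated in $\mathbb{Q}^d$ with the following Python sketch. It is one possible constructive reading of the lemma, not a proof, and the helper names `rank` and `steinitz_exchange` are hypothetical. The sketch assumes that S spans V and that every element of L lies in V. Each element of L in turn replaces some not-yet-replaced element of S, chosen so that the rank does not drop. Because the new vector already lies in the current span, equal rank means the span is unchanged.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of vectors in Q^d, by Gaussian elimination over fractions."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    if not rows:
        return 0
    r = 0  # number of pivots found so far
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def steinitz_exchange(spanning, independent):
    """Replace len(independent) elements of `spanning` by the independent vectors.

    Assumes `spanning` spans V and each independent vector lies in V.
    """
    current = list(spanning)
    protected = set()        # positions that already hold an element of L
    target = rank(current)   # dimension of V = span(S)
    for l in independent:
        for k in range(len(current)):
            if k in protected:
                continue
            trial = current[:k] + [l] + current[k + 1:]
            # l is in span(current), so equal rank means equal span.
            if rank(trial) == target:
                current = trial
                protected.add(k)
                break
        else:
            raise ValueError("no exchange possible: L dependent or not in span(S)")
    return current
```

With S = (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1) and L = (1, 1, 0), (0, 1, 1), the sketch returns (1, 1, 0), (0, 1, 1), (0, 0, 1), (1, 1, 1). This is a spanning set of four elements that contains L and whose other elements come from S.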
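Most properties resulting from the Steinitz exchange lemma remain true when there is no finite spanning set, but their proofs in the infinite case generally require the axiom of choice or a weaker form of it, such as the ultrafilter lemma.

Let $B_\text{old} = (v_1, \ldots, v_n)$ and $B_\text{new} = (w_1, \ldots, w_n)$ be two ordered bases of a vector space V of dimension n. Let $A = (a_{i,j})$ be the matrix whose j-th column consists of the coordinates of $w_j$ over $B_\text{old}$, that is, $w_j = \sum_{i=1}^n a_{i,j} v_i$. If $X = (x_1, \ldots, x_n)^\mathsf{T}$ and $Y = (y_1, \ldots, y_n)^\mathsf{T}$ are the column vectors of the coordinates of a vector x over $B_\text{old}$ and over $B_\text{new}$ respectively, the change-of-basis formula is $X = AY$. The formula can be proven by considering the decomposition of the vector x on the two bases: one has

$$x = \sum_{i=1}^n x_i v_i$$

and

$$x = \sum_{j=1}^n y_j w_j = \sum_{j=1}^n y_j \sum_{i=1}^n a_{i,j} v_i = \sum_{i=1}^n \left( \sum_{j=1}^n a_{i,j} y_j \right) v_i.$$

The change-of-basis formula then results from the uniqueness of the decomposition of a vector over a basis, here $B_\text{old}$; that is, $x_i = \sum_{j=1}^n a_{i,j} y_j$ for $i = 1, \ldots, n$.

As for vector spaces, a basis of a module is a linearly independent subset that is also a generating set. A major difference with the theory of vector spaces is that not every module has a basis; a module that has a basis is called a free module. For example, the group $\mathbb{Z}/2\mathbb{Z}$, viewed as a module over the integers, has no basis. The empty set does not generate it, and every nonempty subset is linearly dependent because $2 \cdot x = 0$ for every element x. A module over the integers is exactly the same thing as an abelian group, so a free module over the integers is the same thing as a free abelian group. Free abelian groups have specific properties that are not shared by modules over other rings.

In the context of infinite-dimensional vector spaces over the real or complex numbers, the term Hamel basis (named after Georg Hamel) or algebraic basis can be used to refer to a basis as defined in this article. This is to make a distinction with other notions of "basis" that exist when infinite-dimensional vector spaces are endowed with extra structure. The most important alternatives are orthogonal bases on Hilbert spaces, and Schauder bases and Markushevich bases on normed linear spaces. The common feature of the other notions is that they permit the taking of infinite linear combinations of the basis vectors in order to generate the space.

If X is an infinite-dimensional normed vector space that is complete (that is, X is a Banach space), then any Hamel basis of X is necessarily uncountable; this is a consequence of the Baire category theorem. Completeness and infinite dimension are both crucial assumptions in this claim. Finite-dimensional spaces have, by definition, finite bases, and there are infinite-dimensional normed spaces that are not complete and have countable Hamel bases.

In the study of Fourier series, one learns that the functions $\{1\} \cup \{\sin(nx), \cos(nx) : n = 1, 2, 3, \ldots\}$ are an "orthogonal basis" of the (real or complex) vector space of all (real or complex valued) functions on the interval $[0, 2\pi]$ that are square-integrable on this interval, i.e., functions f satisfying

$$\int_0^{2\pi} |f(x)|^2 \, dx < \infty.$$

These functions are linearly independent, and every function f that is square-integrable on $[0, 2\pi]$ is an "infinite linear combination" of them, in the sense that

$$\lim_{n \to \infty} \int_0^{2\pi} \left| a_0 + \sum_{k=1}^n \left( a_k \cos(kx) + b_k \sin(kx) \right) - f(x) \right|^2 dx = 0$$

for suitable (real or complex) coefficients $a_k, b_k$. However, many square-integrable functions cannot be represented as finite linear combinations of these basis functions, which therefore do not form a Hamel basis. Every Hamel basis of this space is much bigger than this merely countably infinite set of functions.

Consider a probability distribution in $\mathbb{R}^n$ with a probability density function, such as the equidistribution in an n-dimensional ball with respect to Lebesgue measure. It can be shown that n randomly and independently chosen vectors will then form a basis with probability one. This is because n linearly dependent vectors $x_1, \ldots, x_n$ in $\mathbb{R}^n$ satisfy the equation $\det[x_1 \cdots x_n] = 0$ (zero determinant of the matrix with columns $x_i$), and the set of zeros of a non-trivial polynomial has zero measure.[6][7]

It is difficult to check linear dependence or exact orthogonality numerically. Therefore, the notion of ε-orthogonality is used. In a space with an inner product, x is ε-orthogonal to y if $|\langle x, y \rangle| / (\|x\| \, \|y\|) < \varepsilon$, that is, the cosine of the angle between x and y is less than ε in absolute value. In high dimensions, two independent random vectors are almost orthogonal with high probability. Moreover, the number N of independent random vectors that are all pairwise ε-orthogonal with a given high probability grows exponentially with the dimension n, so that $N \gg n$ for sufficiently big n. This property of random bases is a manifestation of the so-called measure concentration phenomenon.[8]

The figure (right) illustrates the distribution of lengths N of pairwise almost orthogonal chains of vectors that are independently randomly sampled from the n-dimensional cube $[-1, 1]^n$, as a function of the dimension n. A point is first randomly selected in the cube.

Let V be any vector space over some field F, and let X be the set of all linearly independent subsets of V. The set X is nonempty, since the empty set is a linearly independent subset of V, and it is partially ordered by inclusion, denoted ⊆. Every totally ordered subset of (X, ⊆) has an upper bound in X, namely the union of its members, which is linearly independent because any finite subset of it lies in a single member. As X is nonempty, Zorn's lemma asserts that X has a maximal element $L_{\max}$. This maximal element spans V. If some vector v of V were not a linear combination of elements of $L_{\max}$, then $L_{\max} \cup \{v\}$ would be a linearly independent set strictly containing $L_{\max}$, contradicting maximality. Hence $L_{\max}$ is a basis of V, so every vector space has a basis. This proof relies on Zorn's lemma, which is equivalent to the axiom of choice. Conversely, it has been proved that if every vector space has a basis, then the axiom of choice is true; thus the two assertions are equivalent.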
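The change-of-basis formula can be checked on a small example with the Python sketch below. It assumes the convention that column j of the matrix A holds the coordinates of the new basis vector $w_j$ over the old basis $v_1, \ldots, v_n$. Under this convention, the old coordinates X and the new coordinates Y of a vector x satisfy $X = AY$. The basis vectors and the vector x are arbitrary illustrative values, and `combine` and `change_of_basis` are hypothetical helper names.

```python
from fractions import Fraction

def combine(coeffs, vectors):
    """Return sum_i coeffs[i] * vectors[i], with vectors given as equal-length tuples."""
    dim = len(vectors[0])
    return tuple(sum(Fraction(c) * v[k] for c, v in zip(coeffs, vectors))
                 for k in range(dim))

def change_of_basis(A, Y):
    """Old coordinates X = A Y; column j of A holds the old coordinates of w_j."""
    n = len(A)
    return [sum(Fraction(A[i][j]) * Y[j] for j in range(n)) for i in range(n)]

old = [(1, 0), (1, 1)]        # v_1, v_2 (illustrative values)
A = [[1, 1], [1, -1]]         # w_1 = v_1 + v_2, w_2 = v_1 - v_2
# Build the new basis from the columns of A.
new = [combine([A[i][j] for i in range(2)], old) for j in range(2)]
Y = [3, 2]                    # coordinates of x over the new basis
X = change_of_basis(A, Y)     # coordinates of x over the old basis
# Both decompositions describe the same vector x.
assert combine(Y, new) == combine(X, old)
```

Here $w_1 = (2, 1)$ and $w_2 = (0, -1)$. The vector $x = 3 w_1 + 2 w_2 = (6, 1)$ gets old coordinates $X = (5, 1)$, and indeed $5 v_1 + 1 v_2 = (6, 1)$.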
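The sense in which a square-integrable function is an "infinite linear combination" of $1, \cos(kx), \sin(kx)$ can be observed numerically. The Python sketch below assumes the usual normalization $a_0 = \frac{1}{2\pi}\int_0^{2\pi} f(x)\,dx$, $a_k = \frac{1}{\pi}\int_0^{2\pi} f(x)\cos(kx)\,dx$ and $b_k = \frac{1}{\pi}\int_0^{2\pi} f(x)\sin(kx)\,dx$. It approximates every integral with the midpoint rule and uses $f(x) = x$ as an example, for which exactly $a_0 = \pi$, $a_k = 0$ and $b_k = -2/k$. The helper names are hypothetical. The $L^2$ error of the partial sums shrinks as more terms are added, even though no finite partial sum equals f.

```python
import math

TWO_PI = 2 * math.pi

def midpoint_integral(g, a, b, m=2000):
    """Approximate the integral of g over [a, b] with the composite midpoint rule."""
    h = (b - a) / m
    return h * sum(g(a + (i + 0.5) * h) for i in range(m))

def fourier_coefficients(f, n):
    """Approximate a_0 and a_k, b_k (k = 1..n) of f on [0, 2*pi]."""
    a0 = midpoint_integral(f, 0, TWO_PI) / TWO_PI
    a = [midpoint_integral(lambda x, k=k: f(x) * math.cos(k * x), 0, TWO_PI) / math.pi
         for k in range(1, n + 1)]
    b = [midpoint_integral(lambda x, k=k: f(x) * math.sin(k * x), 0, TWO_PI) / math.pi
         for k in range(1, n + 1)]
    return a0, a, b

def l2_error(f, n):
    """Square root of the integral over [0, 2*pi] of |S_n(x) - f(x)|^2."""
    a0, a, b = fourier_coefficients(f, n)

    def partial_sum(x):
        return a0 + sum(a[k - 1] * math.cos(k * x) + b[k - 1] * math.sin(k * x)
                        for k in range(1, n + 1))

    return math.sqrt(midpoint_integral(lambda x: (partial_sum(x) - f(x)) ** 2, 0, TWO_PI))

f = lambda x: x   # square-integrable on [0, 2*pi], not a finite trigonometric sum
errors = [l2_error(f, n) for n in (1, 2, 4, 8, 16, 32)]
```

The errors decrease steadily with n but never reach zero for a finite n. This matches the description of the trigonometric system as a basis only in the sense of infinite linear combinations.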
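The probability-one statement about random bases can be observed with a Monte Carlo sketch in Python. It assumes uniform sampling from the unit ball of $\mathbb{R}^n$, using a Gaussian direction and radius $U^{1/n}$ for U uniform on [0, 1]. It computes the determinant of the matrix with columns $x_1, \ldots, x_n$ by Gaussian elimination with partial pivoting and counts how often that determinant is exactly zero. The seed, n = 5 and 1000 trials are arbitrary choices, and the helper names are hypothetical.

```python
import math
import random

def random_point_in_ball(n, rng):
    """Uniform sample from the unit ball in R^n: Gaussian direction, radius U^(1/n)."""
    g = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(t * t for t in g))
    r = rng.random() ** (1.0 / n)
    return [r * t / norm for t in g]

def det(columns):
    """Determinant of the square matrix with the given columns (partial pivoting)."""
    n = len(columns)
    m = [[columns[j][i] for j in range(n)] for i in range(n)]  # row-major copy
    d = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        if m[p][c] == 0.0:
            return 0.0          # whole column below the diagonal is zero
        if p != c:
            m[c], m[p] = m[p], m[c]
            d = -d              # a row swap flips the sign
        d *= m[c][c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= factor * m[c][k]
    return d

rng = random.Random(0)   # fixed seed so that the run is reproducible
n, trials = 5, 1000
singular = sum(1 for _ in range(trials)
               if det([random_point_in_ball(n, rng) for _ in range(n)]) == 0.0)
```

In floating-point arithmetic, the determinant of a deliberately dependent set such as $x_1, x_2, x_1 + x_2$ need not come out exactly 0; it is typically only tiny. This is one reason why exact linear dependence is difficult to check numerically.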
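Near-orthogonality of random vectors in high dimension can be illustrated with the following Python sketch. It does not reproduce the chain experiment described for the figure. Instead, it samples independent pairs of vectors uniformly from the cube $[-1, 1]^n$ and reports the share of pairs whose cosine has absolute value below ε = 0.1. The threshold, seed and sample size are arbitrary assumptions, and the helper names are hypothetical. The share grows toward 1 as n increases, because the cosine of two such vectors has standard deviation of roughly $1/\sqrt{n}$.

```python
import math
import random

def cosine(x, y):
    """Cosine of the angle between two nonzero vectors."""
    dot = sum(a * b for a, b in zip(x, y))
    return dot / math.sqrt(sum(a * a for a in x) * sum(b * b for b in y))

def fraction_eps_orthogonal(n, eps, pairs, rng):
    """Share of independent random pairs from [-1, 1]^n with |cos| < eps."""
    hits = 0
    for _ in range(pairs):
        x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        y = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        if abs(cosine(x, y)) < eps:
            hits += 1
    return hits / pairs

rng = random.Random(1)   # fixed seed so that the run is reproducible
shares = {n: fraction_eps_orthogonal(n, 0.1, 300, rng) for n in (10, 100, 1000)}
```

With these settings, roughly a quarter of the pairs are 0.1-orthogonal in dimension 10, while almost all of them are in dimension 1000.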
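In a finite-dimensional setting, the maximal element that Zorn's lemma provides can be built by a plain greedy procedure, with no appeal to the axiom of choice. The Python sketch below works in $\mathbb{Q}^d$ with the hypothetical helpers `rank` and `extend_to_maximal_independent`. It starts from a linearly independent list (for instance the empty one, as in the proof). It then adds each candidate vector exactly when that vector is not a linear combination of the vectors already kept. If the candidates span V, the result is a maximal linearly independent subset and therefore a basis of V.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of vectors in Q^d, by Gaussian elimination over fractions."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    if not rows:
        return 0
    r = 0  # number of pivots found so far
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def extend_to_maximal_independent(independent, candidates):
    """Greedily enlarge an independent list with vectors from `candidates`.

    A candidate is kept exactly when adding it raises the rank by one, i.e.
    when it is not a linear combination of the vectors kept so far.
    """
    basis = list(independent)
    for v in candidates:
        if rank(basis + [v]) == len(basis) + 1:
            basis.append(v)
    return basis
```

Every rejected candidate already lies in the span of the kept vectors, so nothing can be added afterwards without losing independence. For the candidates (1, 0, 0), (2, 0, 0), (0, 1, 0), (1, 1, 0), (0, 0, 1), starting from the empty list, the sketch keeps (1, 0, 0), (0, 1, 0), (0, 0, 1).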
