Thread (computing)

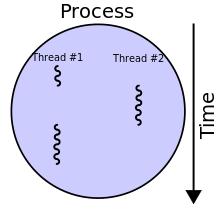

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system.[2]

Threads made an early appearance under the name of "tasks" in IBM's batch processing operating system, OS/360, in 1967. OS/360 offered users three configurations of its control system, of which Multiprogramming with a Variable Number of Tasks (MVT) was one.[3] The use of threads in software applications became more common in the early 2000s as CPUs began to utilize multiple cores.

Kernel threads do not own resources except for a stack, a copy of the registers including the program counter, and thread-local storage (if any), and are thus relatively cheap to create and destroy.

A program can be written to avoid synchronous I/O and other blocking system calls, in particular by using non-blocking I/O, including lambda continuations and/or async/await primitives.[6]

Threads differ from traditional multitasking operating-system processes in several ways. Systems such as Windows NT and OS/2 are said to have cheap threads and expensive processes; in other operating systems the difference is smaller, apart from the cost of an address-space switch, which on some architectures (notably x86) results in a translation lookaside buffer (TLB) flush.

Multi-user operating systems generally favor preemptive multithreading for its finer-grained control over execution time via context switching. However, preemptive scheduling may context-switch threads at moments unanticipated by programmers, thus causing lock convoy, priority inversion, or other side-effects.

Systems with a single processor generally implement multithreading by time slicing: the central processing unit (CPU) switches between different software threads.

When all of an application's threads are implemented in userspace and mapped to a single kernel-scheduled entity, context switching can be done very quickly and, in addition, threading can be implemented even on simple kernels that do not support it. One of the major drawbacks, however, is that this approach cannot benefit from hardware acceleration on multithreaded processors or multi-processor computers: there is never more than one thread being scheduled at the same time.

Multithreading is a widespread programming and execution model that allows multiple threads to exist within the context of one process. Because these threads share the process's address space, concurrently running code can couple tightly and conveniently exchange data without the overhead or complexity of inter-process communication (IPC). Shared data, however, can be corrupted when threads access it concurrently without coordination. To prevent this, threading application programming interfaces (APIs) offer synchronization primitives such as mutexes to lock data structures against concurrent access. A thread that finds a mutex locked must either sleep or spin until it is released; both may sap performance and force processors in symmetric multiprocessing (SMP) systems to contend for the memory bus, especially if the granularity of the locking is too fine.
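
The use of a mutex to guard shared data can be illustrated with a minimal sketch in Python's standard `threading` module. The function name `run_counter` and its parameters are hypothetical, chosen only for this illustration; the sketch shows one simple way to serialize updates to a shared counter, not a recommended design for any particular system.

```python
import threading


def run_counter(num_threads: int, increments: int) -> int:
    """Increment one shared counter from several threads and return its final value.

    Hypothetical helper for illustration only.
    """
    counter = 0
    lock = threading.Lock()  # the mutex that guards `counter`

    def worker() -> None:
        nonlocal counter
        for _ in range(increments):
            # Only one thread at a time may hold the lock, so the
            # read-modify-write on `counter` cannot interleave.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every worker to finish
    return counter


if __name__ == "__main__":
    print(run_counter(4, 10_000))  # prints 40000
```

Here the lock is acquired once per increment, which is deliberately fine-grained: it keeps the example short, but it also shows the kind of locking granularity that can cost performance under contention.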

