Stack machine

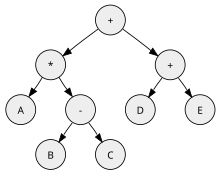

In stack machine code (sometimes called p-code), instructions will frequently have only an opcode commanding an operation, with no additional fields identifying a constant, register or memory cell; this is known as a zero address format. Branches, load immediates, and load/store instructions require an argument field, but stack machines often arrange that the frequent cases of these still fit together with the opcode into a compact group of bits. All practical stack machines have variants of the load–store opcodes for accessing local variables and formal parameters without explicit address calculations. The instruction set carries out most ALU actions with postfix (reverse Polish notation) operations that work only on the expression stack, not on data registers or main memory cells.

If the expression stack and the call-return stack are separated, the instructions of the stack machine can be pipelined with fewer interactions and less design complexity, so it will usually run faster. Typical Java interpreters do not buffer the top-of-stack in registers, however, because the program and stack have a mix of short and wide data values. It is uncommon to have the registers be fully general purpose, because then there is no strong reason to have an expression stack and postfix instructions. The term "stack machine" is commonly reserved for machines which also use an expression stack and stack-only arithmetic instructions to evaluate the pieces of a single statement. "Up level" addressing of the contents of callers' stack frames is usually not needed and not supported as directly by the hardware.

This forces register interpreters to be much slower on microprocessors made with a fine process rule (i.e. faster transistors without improving circuit speeds, such as the Haswell x86). (However, these values often need to be spilled into "activation frames" at the end of a procedure's definition, basic block, or at the very least, into a memory buffer during interrupt processing.) This spilling effect depends on the number of hidden registers used to buffer top-of-stack values, on the frequency of nested procedure calls, and on host computer interrupt processing rates.

Saving a common subexpression's result in memory for reuse is only a win if the subexpression computation costs more in time than fetching from memory, which in most stack CPUs is almost always the case. It is never worthwhile for simple variables and pointer fetches, because those already have the same cost of one data cache cycle per access.

A program runs faster without stalls if its memory loads can be started several cycles before the instruction that needs that variable. Stack machines can work around the memory delay in several ways: with a deep out-of-order execution pipeline covering many instructions at once; more likely, by permuting the stack so that they can work on other workloads while the load completes; or by interlacing the execution of different program threads, as in the Unisys A9 system.[30] For example, in the Java Optimized Processor (JOP) microprocessor the top two operands of the stack directly enter a data forwarding circuit that is faster than the register file.[33] The cited research shows that such a stack machine can exploit instruction-level parallelism, and the resulting hardware must cache data for the instructions. The result achieves throughput (instructions per clock) comparable to load–store architecture machines, with much higher code densities (because operand addresses are implicit).[citation needed] Competitive out-of-order stack machines therefore require about twice as many electronic resources to track instructions ("issue stations").

Some simple stack machines have a chip design which is fully customized all the way down to the level of individual registers. However, most stack machines are built from larger circuit components where the N data buffers are stored together within a register file and share read/write buses. The translated code still retained plenty of emulation overhead from the mismatch between original and target machines. This shows that the stack architecture and its non-optimizing compilers were wasting over half of the power of the underlying hardware. Register files are good tools for computing because they have high bandwidth and very low latency, compared to memory references via data caches. Processor registers have a high thermal cost, and a stack machine might claim higher energy efficiency.[37]

This slowdown is worst when running on host machines with deep execution pipelines, such as current x86 chips. The host machine's prefetch mechanisms are unable to predict and fetch the target of that indexed or indirect jump.[40][41] Since the Java virtual machine became popular, microprocessors have employed advanced branch predictors for indirect jumps.[42] This advance avoids most of the pipeline restarts from N-way jumps and eliminates much of the instruction count cost that affects stack interpreters.
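
The zero address format and postfix evaluation described above can be illustrated with a minimal interpreter sketch. It is not modeled on any particular machine: the opcode names (`PUSH`, `LOAD`, `STORE`, `ADD`, `SUB`, `MUL`), the `run` function, and the tuple encoding of instructions are all assumptions made for illustration. Arithmetic instructions carry no operand field and work only on the expression stack, while load immediate and local-variable load/store carry a single argument.

```python
import operator
from enum import Enum, auto


class Op(Enum):
    """Hypothetical opcodes for an illustrative stack machine."""
    PUSH = auto()   # load immediate: argument is the constant
    LOAD = auto()   # load local variable: argument is a slot index
    STORE = auto()  # store local variable: argument is a slot index
    ADD = auto()    # zero-address: operands are implicit (top of stack)
    SUB = auto()
    MUL = auto()


# Zero-address ALU operations, applied to the top two stack entries.
BINARY = {Op.ADD: operator.add, Op.SUB: operator.sub, Op.MUL: operator.mul}


def run(program, num_locals=4):
    """Execute a list of (opcode, argument) pairs; argument is None if unused."""
    stack = []
    local_vars = [0] * num_locals
    for op, arg in program:
        # This chain of tests plays the role of the interpreter's N-way dispatch.
        if op is Op.PUSH:
            stack.append(arg)
        elif op is Op.LOAD:
            stack.append(local_vars[arg])
        elif op is Op.STORE:
            local_vars[arg] = stack.pop()
        elif op in BINARY:
            right = stack.pop()
            left = stack.pop()
            stack.append(BINARY[op](left, right))
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack, local_vars


# Postfix encoding of:  x = (2 + 3) * 4;  then evaluate  x - 1
PROGRAM = [
    (Op.PUSH, 2), (Op.PUSH, 3), (Op.ADD, None),
    (Op.PUSH, 4), (Op.MUL, None),
    (Op.STORE, 0),
    (Op.LOAD, 0), (Op.PUSH, 1), (Op.SUB, None),
]

if __name__ == "__main__":
    final_stack, final_locals = run(PROGRAM)
    print(final_stack, final_locals)  # [19] [20, 0, 0, 0]
```

In this sketch only `PUSH`, `LOAD`, and `STORE` need an argument; a real encoding would typically pack the frequent small arguments into the same few bits as the opcode, which the tuple representation here does not attempt to model.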
