Expected value

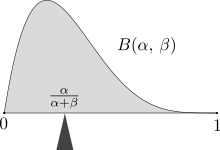

The expected value is commonly denoted by the letter E.[1][2][3]

The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes fairly between two players who have to end their game before it is properly finished. Many conflicting proposals and solutions had been suggested over the years when it was posed to Blaise Pascal by the French writer and amateur mathematician Chevalier de Méré in 1654. Méré claimed that this problem could not be solved and that it showed just how flawed mathematics was when applied to the real world. Pascal and Pierre de Fermat were very pleased that they had found essentially the same solution, and this in turn made them absolutely convinced that they had solved the problem conclusively; however, they did not publish their findings.

In the foreword to his treatise, Huygens wrote:

"It should be said, also, that for some time some of the best mathematicians of France have occupied themselves with this kind of calculus so that no one should attribute to me the honour of the first invention. But these savants, although they put each other to the test by proposing to each other many questions difficult to solve, have hidden their methods. I have had therefore to examine and go deeply for myself into this matter by beginning with the elements, and it is impossible for me for this reason to affirm that I have even started from the same principle. But finally I have found that my answers in many cases do not differ from theirs."

In the mid-nineteenth century, Pafnuty Chebyshev became the first person to think systematically in terms of the expectations of random variables.

Huygens wrote: "If I expect a or b, and have an equal chance of gaining them, my Expectation is worth (a+b)/2." More than a hundred years later, in 1814, Pierre-Simon Laplace published his tract "Théorie analytique des probabilités", where the concept of expected value was defined explicitly:[8]

"... this advantage in the theory of chance is the product of the sum hoped for by the probability of obtaining it; it is the partial sum which ought to result when we do not wish to run the risks of the event in supposing that the division is made proportional to the probabilities. We will call this advantage mathematical hope."

The use of the letter E to denote "expected value" goes back to W. A. Whitworth in 1901. In German, E stands for Erwartungswert, in Spanish for esperanza matemática, and in French for espérance mathématique.

It is also very common to consider the distinct case of random variables dictated by (piecewise-)continuous probability density functions, as these arise in many natural contexts.

Consider a random variable $X$ with a finite list $x_1, \ldots, x_k$ of possible outcomes, each of which (respectively) has probability $p_1, \ldots, p_k$ of occurring.

Since the outcomes of a random variable have no naturally given order, this creates a difficulty in defining expected value precisely.[14] In the alternative case that the infinite sum does not converge absolutely, one says the random variable does not have finite expectation.

The density functions of many common distributions are piecewise continuous, and as such the theory is often developed in this restricted setting. Sometimes continuous random variables are defined as those corresponding to this special class of densities, although the term is used differently by various authors. To avoid such ambiguities, in mathematical textbooks it is common to require that the given integral converges absolutely, with $\operatorname{E}[X]$ left undefined otherwise.[19]

Moreover, for a random variable with finitely or countably many possible values, the Lebesgue theory of expectation is identical to the summation formulas given above. According to the change-of-variables formula for Lebesgue integration,[21] combined with the law of the unconscious statistician,[22] it follows that $\operatorname{E}[X] = \int_{\mathbb{R}} x f(x)\,dx$ for any absolutely continuous random variable $X$ with density $f$. The above discussion of continuous random variables is thus a special case of the general Lebesgue theory, due to the fact that every piecewise-continuous function is measurable.

This is intuitive, for example, in the case of the St. Petersburg paradox, in which one considers a random variable with possible outcomes $x_i = 2^i$, with associated probabilities $p_i = 2^{-i}$, for $i$ ranging over all positive integers. There is a rigorous mathematical theory underlying such ideas, which is often taken as part of the definition of the Lebesgue integral. Due to the formula $|X| = X^{+} + X^{-}$, this is the case if and only if $\operatorname{E}|X|$ is finite, and this is equivalent to the absolute convergence conditions in the definitions above.

The following table gives the expected values of some commonly occurring probability distributions.

Markov's inequality is among the best-known and simplest to prove: for a nonnegative random variable $X$ and any positive number $a$, it states that $\operatorname{P}(X \geq a) \leq \operatorname{E}[X]/a$.[37][39] The following three inequalities are of fundamental importance in the field of mathematical analysis and its applications to probability theory.

For a different example, in decision theory, an agent making an optimal choice in the context of incomplete information is often assumed to maximize the expected value of their utility function. The law of large numbers demonstrates (under fairly mild conditions) that, as the size of the sample gets larger, the variance of this estimate gets smaller. This property is often exploited in a wide variety of applications, including general problems of statistical estimation and machine learning, to estimate (probabilistic) quantities of interest via Monte Carlo methods, since most quantities of interest can be written in terms of expectation, e.g.
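
As a rough illustration of three ideas from the text, the following Python sketch computes the expected value of a finite discrete random variable as a probability-weighted sum, estimates the same quantity by Monte Carlo sampling, and evaluates partial sums for the St. Petersburg outcomes. The function names (`expected_value`, `monte_carlo_mean`, `st_petersburg_partial_sum`) and the fair six-sided die are illustrative assumptions and do not come from the article.

```python
import random


def expected_value(outcomes, probs):
    """Probability-weighted sum of x_i * p_i over a finite list of outcomes."""
    if len(outcomes) != len(probs):
        raise ValueError("outcomes and probs must have the same length")
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in zip(outcomes, probs))


def monte_carlo_mean(sample, n, rng):
    """Average of n independent draws; approximates the expectation for large n."""
    return sum(sample(rng) for _ in range(n)) / n


def st_petersburg_partial_sum(n):
    """Sum of x_i * p_i for i = 1..n with x_i = 2**i and p_i = 2**-i.

    Every term equals 1, so the partial sums grow without bound.
    """
    return sum((2 ** i) * (2.0 ** -i) for i in range(1, n + 1))


# Assumed example: a fair six-sided die.
die_faces = [1, 2, 3, 4, 5, 6]
die_probs = [1 / 6] * 6
exact = expected_value(die_faces, die_probs)

# Seeded generator so the simulation is reproducible.
rng = random.Random(0)
estimate = monte_carlo_mean(lambda r: r.choice(die_faces), 100_000, rng)

# Empirical check of Markov's inequality P(X >= a) <= E[X] / a for a = 5.
a = 5
rolls = [rng.choice(die_faces) for _ in range(100_000)]
tail_fraction = sum(1 for x in rolls if x >= a) / len(rolls)
markov_bound = exact / a
```

With more samples, `estimate` tends to move closer to `exact`, which is the behaviour the law of large numbers describes. The partial sums returned by `st_petersburg_partial_sum(n)` equal `n`, which matches the statement that this random variable does not have finite expectation.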
